Raptor bills itself as a new Ruby “app server” and it claims to blow everything else out of the water performance-wise (by between 2-4x!) whether that’s Unicorn, Puma, Passenger, or even TorqueBox on JRuby. The bad news for now is there’s no source or repo yet and only a handful of people (including me) have been given a sneak peek, although a public beta is promised on November 25th.

The history of Ruby webapp deployment

The deployment of Ruby (and therefore Rails) webapps was a painful mess for years, a state I lamented 7 years ago in No True ‘mod_ruby’ is Damaging Ruby’s Viability on the Web. Read More

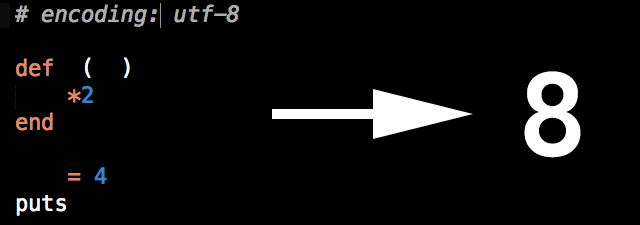

In math, a

In math, a  This is a guest post by

This is a guest post by